your infrastructure, our runtime

Self-Hosted AI Agents

Deploy inference.sh in your VPC or on-prem. Same runtime capabilities, complete control over your data and infrastructure.

when self-hosting makes sense

Not every team can send data to external services. Regulatory requirements, security policies, or simply the nature of the data you're processing may require keeping everything within your own infrastructure.

But choosing between cloud convenience and self-hosted control shouldn't mean choosing between good tooling and no tooling. You shouldn't have to build agent infrastructure from scratch just because you need to run it yourself.

Self-hosted inference.sh gives you the same runtime capabilities as the cloud platform, running entirely within your environment.

what you control

With self-hosted deployment, you maintain complete control over:

- data residency: all data stays within your network. Agent conversations, tool outputs, and execution logs never leave your infrastructure.

- model selection: use any model you choose, whether commercial APIs, open-source models, or fine-tuned models running on your own hardware.

- network access: define exactly what your agents can reach. Air-gapped deployments are fully supported.

- scaling: size the deployment to your needs, from a single server to a distributed cluster.

- audit and compliance: full access to logs, metrics, and execution history for your compliance requirements.
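To make "define exactly what your agents can reach" concrete, here is a minimal sketch of an egress allowlist check in Python. The `EgressPolicy` class and the hostnames are hypothetical and are not inference.sh configuration. In practice you would usually also enforce the same boundary at the network layer, for example with firewall rules or Kubernetes NetworkPolicies.

```python
from dataclasses import dataclass, field
from urllib.parse import urlsplit


@dataclass(frozen=True)
class EgressPolicy:
    """Hypothetical allowlist of hosts an agent's tools may call (exact host match only)."""

    allowed_hosts: frozenset[str] = field(default_factory=frozenset)
    allowed_schemes: frozenset[str] = frozenset({"https"})

    def permits(self, url: str) -> bool:
        parts = urlsplit(url)
        # urlsplit lowercases the hostname; a missing host never matches.
        host = parts.hostname or ""
        return parts.scheme in self.allowed_schemes and host in self.allowed_hosts


# Illustrative internal hosts; replace with the services your agents actually need.
policy = EgressPolicy(
    allowed_hosts=frozenset({"crm.internal.example", "models.internal.example"})
)

print(policy.permits("https://crm.internal.example/api/customers"))  # True
print(policy.permits("https://public-api.example.com/v1/chat"))  # False: host not allowlisted
print(policy.permits("http://crm.internal.example/"))  # False: plain HTTP not allowed
```

The sketch denies by default: anything not explicitly listed is unreachable. That same posture is what makes air-gapped operation straightforward.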

same runtime, different location

Self-hosted inference.sh isn't a stripped-down version. You get the same core capabilities:

durable execution works the same way. Agent state is checkpointed to your own database, surviving failures and resuming automatically.
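The checkpoint-and-resume idea can be sketched with step-level checkpoints in a database you own. This minimal Python example uses SQLite and assumes a hypothetical `CheckpointStore` that records each completed step's result. It illustrates the general pattern only and is not inference.sh's internal mechanism or API. When a run is retried, completed steps return their saved results and only unfinished steps execute again.

```python
import json
import sqlite3
from typing import Any, Callable


class CheckpointStore:
    """Hypothetical step-level checkpoint store backed by SQLite."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS checkpoints ("
            " run_id TEXT, step TEXT, result TEXT,"
            " PRIMARY KEY (run_id, step))"
        )

    def run_step(self, run_id: str, step: str, fn: Callable[[], Any]) -> Any:
        # Reuse the saved result if this step already completed in an earlier attempt.
        row = self.conn.execute(
            "SELECT result FROM checkpoints WHERE run_id = ? AND step = ?",
            (run_id, step),
        ).fetchone()
        if row is not None:
            return json.loads(row[0])
        result = fn()
        # Commit the result before moving on, so a later crash does not redo this step.
        with self.conn:
            self.conn.execute(
                "INSERT INTO checkpoints (run_id, step, result) VALUES (?, ?, ?)",
                (run_id, step, json.dumps(result)),
            )
        return result


conn = sqlite3.connect(":memory:")
store = CheckpointStore(conn)
calls: list[str] = []
crash = True  # simulate a worker failure on the first attempt


def fetch_ticket() -> dict:
    calls.append("fetch")
    return {"id": 42, "text": "refund request"}


def classify() -> str:
    calls.append("classify")
    if crash:
        raise RuntimeError("simulated worker crash")
    return "billing"


def agent_run(run_id: str) -> str:
    ticket = store.run_step(run_id, "fetch_ticket", fetch_ticket)
    label = store.run_step(run_id, "classify", classify)
    return f"ticket {ticket['id']} -> {label}"


try:
    agent_run("run-1")  # first attempt fails at the second step
except RuntimeError:
    pass

crash = False
print(agent_run("run-1"))  # ticket 42 -> billing
print(calls)  # ['fetch', 'classify', 'classify']: fetch_ticket was not re-executed
```

Step results must be serializable (JSON here) so they can be stored and reloaded. Steps with external side effects also need to be idempotent, or checkpointed before the side effect is repeated.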

tool orchestration connects to tools within your network. Internal APIs, databases, and services are accessible to your agents without exposing them externally.

observability captures the same detail. Traces, logs, and metrics are stored in your infrastructure, queryable through your existing tools.

human-in-the-loop integrates with your approval workflows. Route approvals through Slack, email, or your internal systems.
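One way to picture an approval gate is a wrapper that asks a pluggable channel before a sensitive tool runs. In this minimal Python sketch, `ApprovalChannel`, `ScriptedChannel`, and `requires_approval` are hypothetical names, not inference.sh APIs. A Slack, email, or internal-system integration would implement the same `request` method. A real integration would be asynchronous, keeping the paused run persisted while it waits. This synchronous version only shows where the gate sits.

```python
import functools
from dataclasses import dataclass, field
from typing import Callable, Protocol


@dataclass
class ApprovalRequest:
    action: str
    details: dict = field(default_factory=dict)


class ApprovalChannel(Protocol):
    """Hypothetical interface: Slack, email, or an internal system would implement it."""

    def request(self, req: ApprovalRequest) -> bool: ...


class ScriptedChannel:
    """Stand-in channel for this example: returns pre-scripted approver decisions in order."""

    def __init__(self, decisions: list[bool]) -> None:
        self.decisions = list(decisions)
        self.requests: list[ApprovalRequest] = []

    def request(self, req: ApprovalRequest) -> bool:
        self.requests.append(req)
        return self.decisions.pop(0)


def requires_approval(channel: ApprovalChannel, action: str):
    """Wrap a tool so it runs only after `channel` reports approval."""

    def wrap(tool: Callable[..., str]) -> Callable[..., str]:
        @functools.wraps(tool)
        def gated(**kwargs) -> str:
            if not channel.request(ApprovalRequest(action, kwargs)):
                return f"{action} rejected by approver"
            return tool(**kwargs)

        return gated

    return wrap


channel = ScriptedChannel([True, False])


@requires_approval(channel, "issue_refund")
def issue_refund(customer_id: str, amount: float) -> str:
    return f"refunded {amount:.2f} to {customer_id}"


print(issue_refund(customer_id="c-17", amount=25.0))   # refunded 25.00 to c-17
print(issue_refund(customer_id="c-99", amount=900.0))  # issue_refund rejected by approver
```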

deployment options

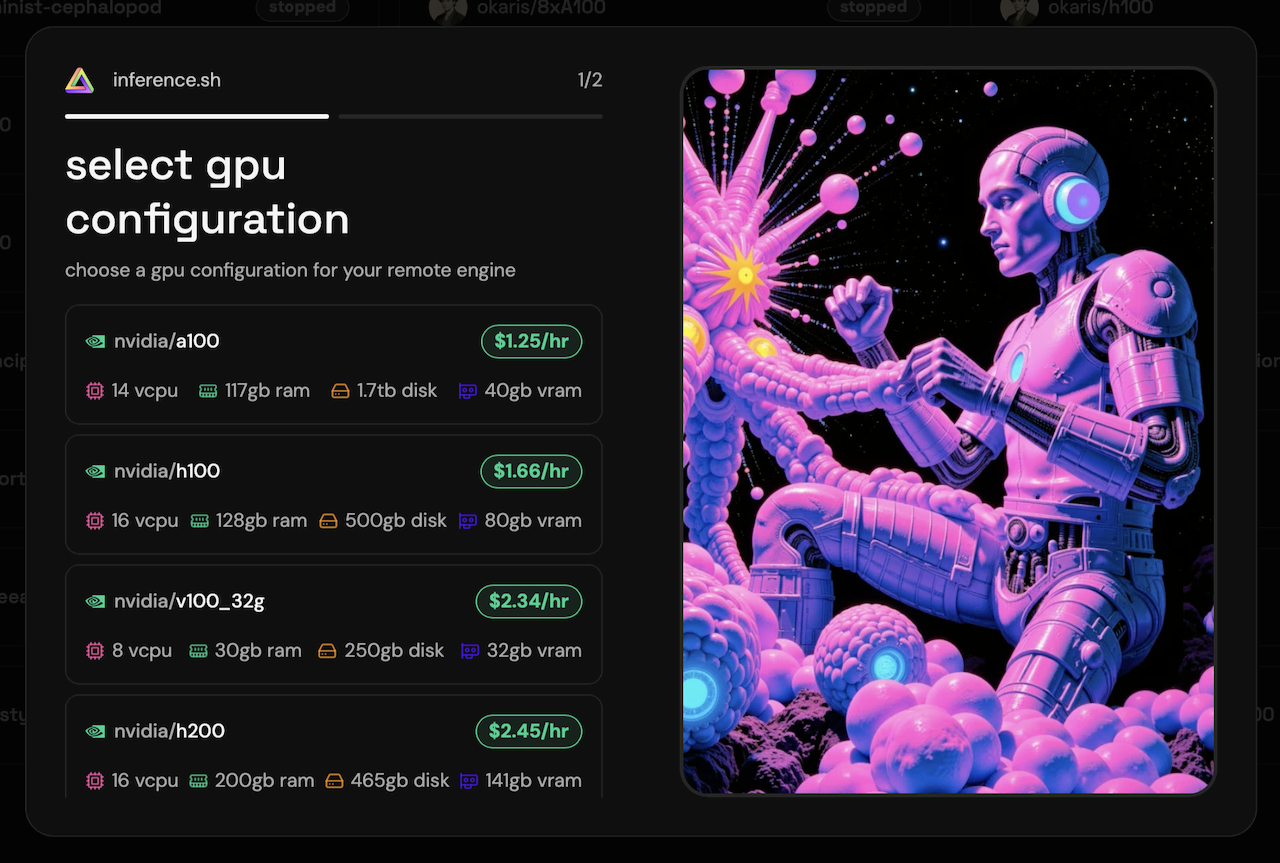

The inference.sh engine is packaged for flexible deployment:

- docker: single-container deployment for development and small-scale production

- kubernetes: helm charts for orchestrated deployments with auto-scaling

- vpc: deploy within your cloud provider's virtual private cloud

- on-premises: run on your own hardware for complete isolation

The documentation covers each option, with configuration guides and best practices for production deployment.

the hybrid path

Many teams start with the hosted platform to move fast, then migrate specific workloads to self-hosted as requirements evolve. The same agent code runs in both environments; you're not locked into either.

This means you can:

- develop and test on the hosted platform

- deploy production to self-hosted infrastructure

- use hosted for non-sensitive workloads, self-hosted for sensitive ones

- migrate gradually without rewriting agents
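A hybrid setup can be reduced to a routing decision made outside the agent code. This minimal Python sketch sends sensitive workloads to a self-hosted endpoint and everything else to the hosted platform. The `Sensitivity` and `Deployment` types, the environment variable names, and the URLs are all hypothetical placeholders, not documented inference.sh settings.

```python
import os
from dataclasses import dataclass
from enum import Enum


class Sensitivity(Enum):
    NON_SENSITIVE = "non-sensitive"
    SENSITIVE = "sensitive"


@dataclass(frozen=True)
class Deployment:
    name: str
    base_url: str


# Hypothetical endpoints: the environment variable names and URLs are illustrative only.
HOSTED = Deployment("hosted", os.environ.get("AGENTS_HOSTED_URL", "https://hosted.example.com"))
SELF_HOSTED = Deployment(
    "self-hosted", os.environ.get("AGENTS_SELF_HOSTED_URL", "https://agents.internal.example")
)


def pick_deployment(sensitivity: Sensitivity) -> Deployment:
    """Send sensitive workloads to self-hosted infrastructure and everything else to hosted."""
    return SELF_HOSTED if sensitivity is Sensitivity.SENSITIVE else HOSTED


# The agent definition is identical in both cases; only the target deployment changes.
support_agent = {"name": "support-triage", "tools": ["fetch_ticket", "classify"]}

for workload in (Sensitivity.NON_SENSITIVE, Sensitivity.SENSITIVE):
    target = pick_deployment(workload)
    print(f"{support_agent['name']} ({workload.value}) -> {target.name}")
```

Keeping the routing rule in configuration means moving a workload from hosted to self-hosted changes one decision, not the agent itself.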

enterprise support

Self-hosted deployments include access to enterprise support:

- deployment assistance and architecture review

- priority support with defined SLAs

- security review and compliance documentation

- custom integration development

enterprise

self-host

deploy inference.sh in your vpc or on-prem for maximum control and privacy.

- keep data inside your network

- bring your own models + infra

- same workspace + api + runtime as cloud

- admin controls, auditability, and enterprise support
